Computational methods have been proposed to leverage spatially resolved transcriptomic data to pinpoint genes with spatial expression patterns and to delineate tissue domains. However, existing approaches fall short in uniformly quantifying spatially variable genes (SVGs). Moreover, although SVGs are naturally tied to the delineation of spatial domains, most methods treat the two tasks separately.

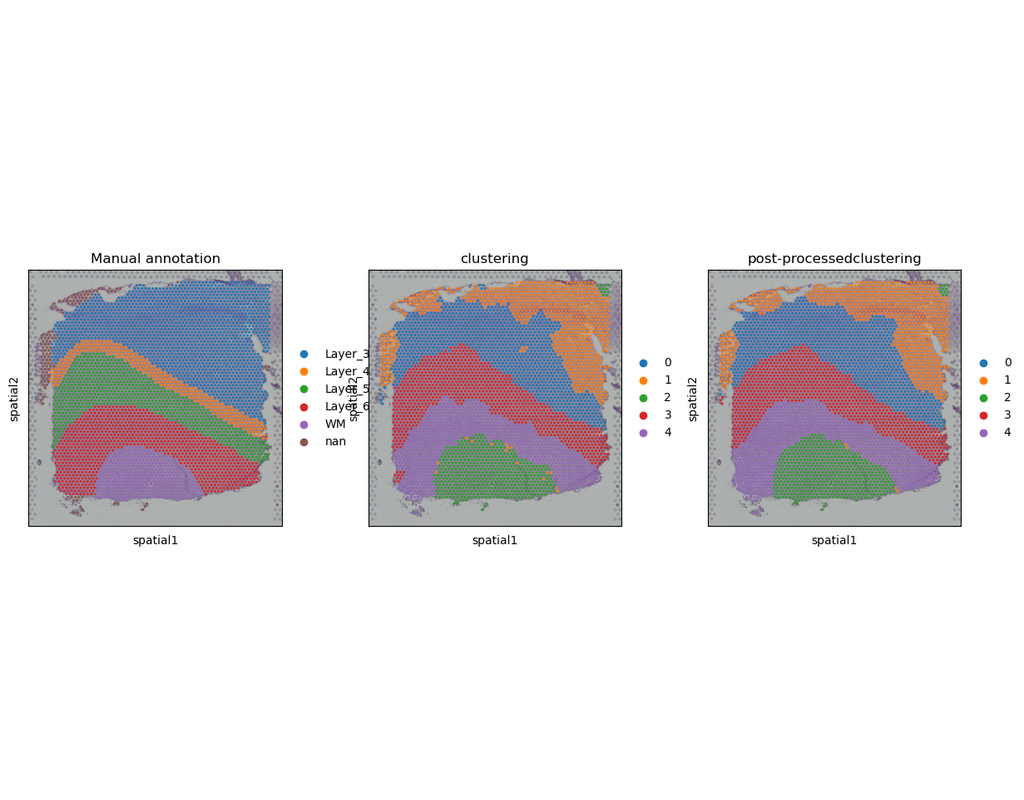

PROST is a flexible framework for quantifying gene spatial expression patterns and detecting spatial tissue domains from spatially resolved transcriptomics data at various resolutions. It consists of two independent workflows: PROST Index (PI) and PROST Neural Network (PNN).

PROST supports:

* Quantitative identification of spatial patterns of gene expression changes by the proposed PROST Index (PI).

* Unsupervised identification of spatial tissue domains using a PROST Neural Network (PNN) via a self-attention mechanism.
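
To make "quantifying a spatial expression pattern" concrete, here is a minimal sketch that scores one gene on a toy grid of spots. It does not implement the PROST Index. It uses Moran's I, a classic spatial autocorrelation statistic, as a stand-in, with binary k-nearest-neighbour weights. The function name `morans_i`, the parameter `k`, and the toy data are all assumptions made for illustration.

```python
import numpy as np


def morans_i(coords, values, k=4):
    """Moran's I with binary k-nearest-neighbour weights (illustrative stand-in, not PI)."""
    coords = np.asarray(coords, dtype=float)
    values = np.asarray(values, dtype=float)
    n = len(values)

    # Pairwise squared distances between spots; exclude self-matches.
    diff = coords[:, None, :] - coords[None, :, :]
    dist = (diff ** 2).sum(axis=-1)
    np.fill_diagonal(dist, np.inf)

    # Binary weight matrix: w[i, j] = 1 if j is among the k nearest spots to i.
    neighbours = np.argsort(dist, axis=1)[:, :k]
    weights = np.zeros((n, n))
    weights[np.repeat(np.arange(n), k), neighbours.ravel()] = 1.0

    centred = values - values.mean()
    denom = (centred ** 2).sum()
    if denom == 0:
        return 0.0  # constant expression carries no spatial signal
    return float(n / weights.sum() * (centred @ weights @ centred) / denom)


# Toy 10x10 grid of spots (hypothetical data).
xs, ys = np.meshgrid(np.arange(10), np.arange(10))
coords = np.column_stack([xs.ravel(), ys.ravel()])

rng = np.random.default_rng(0)
patterned = (coords[:, 0] < 5).astype(float)  # expressed in the left half only
shuffled = rng.permutation(patterned)         # same values, spatial structure destroyed

score_patterned = morans_i(coords, patterned)
score_shuffled = morans_i(coords, shuffled)
print(score_patterned, score_shuffled)
```

The spatially structured gene scores much higher than the shuffled one even though both have identical expression distributions, which is the property any SVG score needs in order to rank genes by spatial pattern.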